Evolution & Behaviour

Tidings from Before the Flood: how Artificial Intelligence Rediscovers Ancient Babylonian Texts

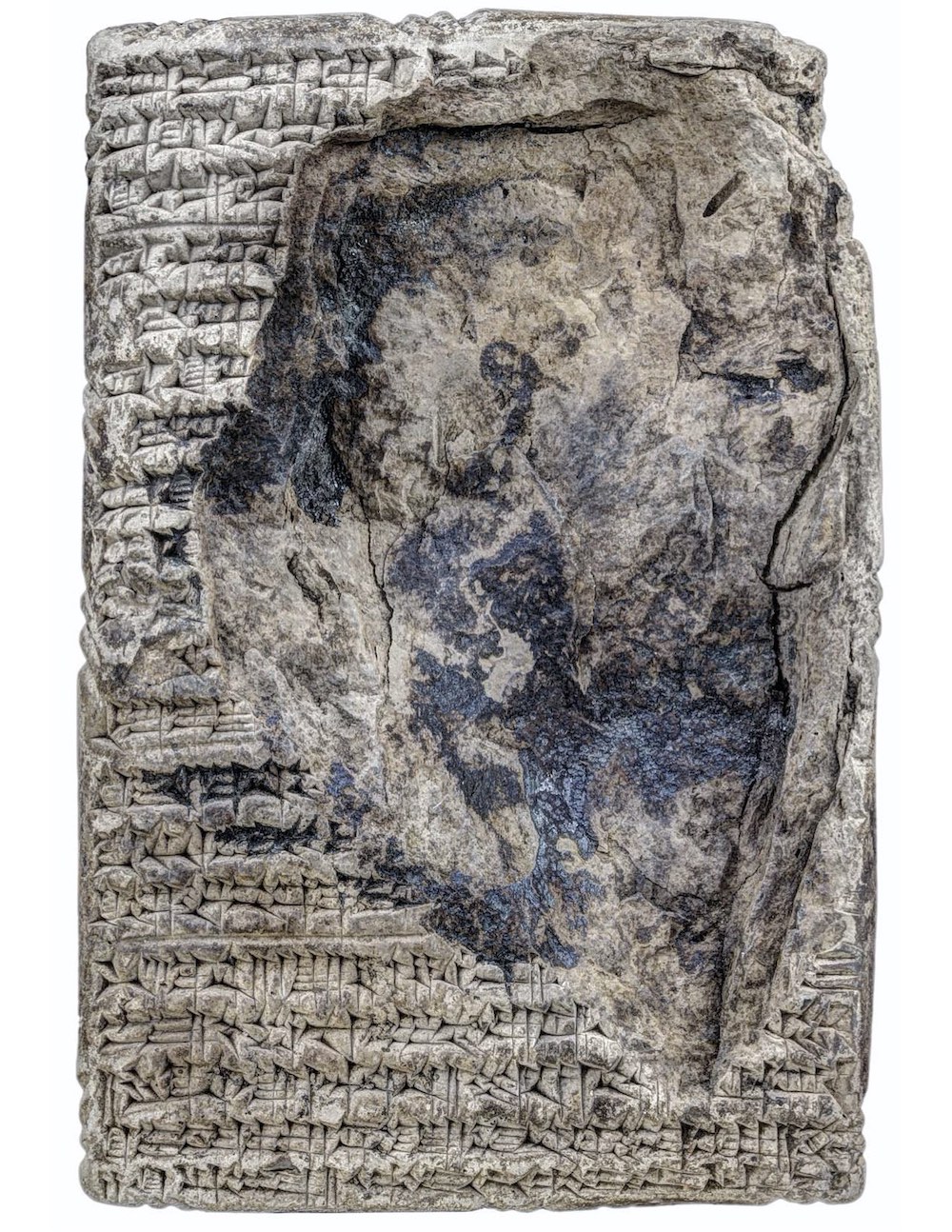

Texts written in cuneiform, the first writing system in the world, hold much information on the cultures of ancient Mesopotamia, the cradle of civilization. However, many of the clay tablets on which the texts were written are broken and fragmented. For this reason, we developed an artificial intelligence model to reconstruct the gaps in these ancient texts.

How much can we learn from our past? And how far back into the past can we draw connections between our predecessors and us? The ancient Mesopotamians, residents of the lands between the rivers Tigris and Euphrates in modern-day Iraq, believed wisdom came from before the flood: a mythological event, echoed in the Bible, in which the great gods decided to obliterate humanity. They accidentally left one survivor, named Atrahasis, Ziusudra, or Uta-napishtim (depending on the version). For the Mesopotamians, this event marked the break between the mythological past and the reality in which they were living.

For us today, there is much wisdom to derive from the ancient Mesopotamians. They developed the first cities and empires. They established the sciences of astronomy and mathematics. They passed down literary works that influenced the Bible. Yet, until the rediscovery of their monuments and the decipherment of the cuneiform script, their contribution to world history was almost forgotten.

However, the hundreds of thousands of cuneiform clay tablets that have been excavated in the past 150 years, from which we gather most of our knowledge about ancient Mesopotamia, are not found in pristine condition. They are often broken, and their surfaces deteriorate with time, making characters illegible. To mitigate this issue, we decided to look to the future for the investigation of the past. We designed and trained an artificial intelligence model on a selection of ancient cuneiform texts to reconstruct the textual gaps.

We focused on Neo-Babylonian and Achaemenid archival documents: texts from the 6th-4th centuries BCE, written in the Babylonian dialect of Akkadian, containing economic, juridical, and administrative records. We used ca. 1,400 such texts available in digital, transliterated editions at the Achemenet project website (http://www.achemenet.com/). Our choice was guided by the genre since archival texts are very formulaic, which simplifies restoration. The primary model we used is a long short-term memory (LSTM) recurrent neural network. LSTM models are used for various applications, such as handwriting and speech recognition, translation, and more.

We designed two experiments to test the capabilities of our model. First, we took 520 complete sentences from the corpus and removed one to three words from each. Then, we used the model to predict restorations for the missing words, ranking them by their likelihood. With one word missing, the model's top prediction was correct 85% of the time, and in 94% of cases the correct answer was within the top ten options. When more words were missing, the model's performance dropped, but the right answer was still among the top ten options half the time.
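The "top one" and "top ten" figures above are instances of what is usually called top-k accuracy. The sketch below illustrates how such a score can be computed. It is not the LSTM model from the study: as a stand-in it ranks candidates with a simple count of which word follows which, and the English tokens, the function names, and the tiny corpus are all invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_counts(sentences):
    """Count how often each word follows each preceding word."""
    follows = defaultdict(Counter)
    for words in sentences:
        for prev, cur in zip(words, words[1:]):
            follows[prev][cur] += 1
    return follows


def rank_candidates(follows, prev_word):
    """Return candidate words for a gap, most frequent first."""
    return [word for word, _ in follows[prev_word].most_common()]


def top_k_accuracy(follows, test_items, k):
    """Fraction of gaps whose true word is among the top-k candidates."""
    hits = 0
    for prev_word, truth in test_items:
        if truth in rank_candidates(follows, prev_word)[:k]:
            hits += 1
    return hits / len(test_items)


# Toy "formulaic" corpus (invented placeholder tokens, not real Akkadian).
corpus = [
    ["one", "mina", "of", "silver"],
    ["two", "mina", "of", "silver"],
    ["one", "mina", "of", "barley"],
    ["one", "shekel", "of", "silver"],
]
follows = train_bigram_counts(corpus)

# Each test item: (word before the gap, word that was removed).
test_items = [
    ("of", "silver"),
    ("of", "barley"),
    ("one", "shekel"),
    ("mina", "of"),
]

print(top_k_accuracy(follows, test_items, k=1))  # 0.5
print(top_k_accuracy(follows, test_items, k=2))  # 1.0
```

Widening the window from one candidate to two raises the score here for the same reason that the reported figure rises from 85% to 94%: a correct word ranked second or lower still counts once the window is large enough to include it.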

The second experiment was designed to give us a qualitative estimation of the model. We generated 52 sentences in which one word is missing and gave the model four options to complete each: the correct word and three incorrect ones. Of the incorrect options, one was wrong semantically (the meaning of the word does not fit), one was wrong syntactically (the word does not fit the rules of sentence structure), and one was wrong on both counts. The model chose an incorrect option in only six sentences: in four the choice was semantically incorrect, in one syntactically incorrect, and in one both.
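A four-option test of this kind can be scored by letting the model pick its highest-scoring option and tallying which kind of option it picked. The following is a minimal sketch under stated assumptions: the `score` function stands in for whatever likelihood a real model would assign, and the items, option words, and score values are hypothetical.

```python
from collections import Counter


def evaluate_multiple_choice(items, score):
    """Pick the highest-scoring option per item and tally the outcome labels."""
    outcomes = Counter()
    for item in items:
        options = item["options"]  # maps option word -> label
        chosen = max(options, key=lambda opt: score(item["context"], opt))
        outcomes[options[chosen]] += 1
    return outcomes


# Hypothetical items: each has one correct option and three distractors.
items = [
    {
        "context": "one mina of ___",
        "options": {
            "silver": "correct",
            "house": "semantic",
            "gave": "syntactic",
            "quickly": "both",
        },
    },
    {
        "context": "ten measures of ___",
        "options": {
            "barley": "correct",
            "king": "semantic",
            "owes": "syntactic",
            "from": "both",
        },
    },
]

# Invented scores standing in for model likelihoods.
toy_scores = {
    ("one mina of ___", "silver"): 0.70,
    ("one mina of ___", "house"): 0.20,
    ("ten measures of ___", "barley"): 0.40,
    ("ten measures of ___", "king"): 0.50,
}


def score(context, option):
    """Look up the invented score; unseen pairs get 0.0."""
    return toy_scores.get((context, option), 0.0)


outcomes = evaluate_multiple_choice(items, score)
print(outcomes["correct"], outcomes["semantic"])  # 1 1
```

Applied to the figures reported above, six errors in 52 sentences means 46 correct choices, or roughly 88%.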

The results of our experiments are promising for the applicability of the model. Part of the work that scholars face when preparing a text edition is filling in, as much as possible, the missing pieces by going through many similar texts. The high accuracy rate of the model can expedite that process by giving reliable options based on thousands of texts. Furthermore, the qualitative assessment shows that the model is most reliable with syntax and less so with semantics. This complements the human tendency to grasp semantics more intuitively than syntax. Therefore, in a human-machine collaboration, the strengths of each would offset the weaknesses of the other.

In the future, we plan to expand the training of the model on texts from additional time periods and genres, making it useful for the restoration of a larger number of cuneiform texts. This will not only reconstruct fragmentary texts, but also the bigger puzzle of the cultures of ancient Mesopotamia, and how much we are indebted to them. In the meantime, we have made the model available as an online tool for scholars at the Babylonian Engine website (https://babylonian.herokuapp.com/). We nicknamed our tool Atrahasis, after the legendary hero who survived the flood of mythical times, whose name literally means “beyond wisdom.”

Original Article:

Fetaya et al., Restoration of fragmentary Babylonian texts using recurrent neural networks. Proceedings of the National Academy of Sciences 117, 22743-22751 (2020).


Edited by:

Massimo Caine, Founder and Director
